Use SwiftUI and Machine Learning To Identify Nearly 1,000 Objects With Your Camera

Daily Coding Tip 084

Download the MobileNetV2 model from Apple’s Core ML models page and add it to a new, blank iOS app project. The model has already been trained to identify 999 objects, which is, as the title suggests, nearly 1,000.

Add an NSCameraUsageDescription row to your Info.plist. Without it, iOS terminates the app the first time it tries to use the camera.

The string can be anything while you are developing, but if you release your app on the App Store, it must explain why you need camera access in a way that Apple accepts.

Most of the logic here involves extensions that make Apple’s frameworks easier to use. We’ll start by making it easy to create an AVCaptureVideoPreviewLayer as a sublayer of any UIView.
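Here is a minimal sketch of what such a UIView extension could look like. The method name `addPreviewLayer(for:)` and the choice of `.resizeAspectFill` are my assumptions for illustration, not necessarily what the project uses.

```swift
import AVFoundation
import UIKit

extension UIView {
    /// Hypothetical helper: adds a camera preview layer for `session`
    /// as a sublayer that covers this view's current bounds.
    @discardableResult
    func addPreviewLayer(for session: AVCaptureSession) -> AVCaptureVideoPreviewLayer {
        let previewLayer = AVCaptureVideoPreviewLayer(session: session)
        previewLayer.videoGravity = .resizeAspectFill // assumption: fill the view, cropping if needed
        previewLayer.frame = bounds
        layer.addSublayer(previewLayer)
        return previewLayer
    }
}
```

A sublayer does not resize with its host view, so the returned layer's `frame` needs to be set again whenever the view's bounds change, for example in `layoutSubviews()`.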

I have added a way to create an AVCaptureDeviceInput that uses the most obvious device, the rear camera of an iPhone.
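A sketch of that idea, assuming the rear wide-angle camera is the device we want. The property name `rearCamera` is hypothetical.

```swift
import AVFoundation

extension AVCaptureDeviceInput {
    /// Hypothetical helper: an input for the rear wide-angle camera,
    /// or nil when no such camera exists (for example in the Simulator).
    static var rearCamera: AVCaptureDeviceInput? {
        guard let device = AVCaptureDevice.default(.builtInWideAngleCamera,
                                                   for: .video,
                                                   position: .back) else {
            return nil
        }
        return try? AVCaptureDeviceInput(device: device)
    }
}
```

Returning an optional keeps the call site simple: a single `guard let input = AVCaptureDeviceInput.rearCamera` covers both the missing-device case and the failed-initializer case.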

Here’s another extension that makes it easier to work with AVCaptureSession.

You don’t need to follow every detail here. The point is to tidy away complex logic so that it doesn’t take up space in our DataModel or SwiftUI views.
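As a rough sketch, an AVCaptureSession extension along these lines could add the input and a video data output in one call. The method name `configure(input:delegate:queue:)` and its Bool return value are assumptions made for this example.

```swift
import AVFoundation

extension AVCaptureSession {
    /// Hypothetical helper: adds `input` plus a video data output whose frames
    /// are delivered to `delegate` on `queue`. Returns false if either step fails.
    @discardableResult
    func configure(input: AVCaptureInput,
                   delegate: AVCaptureVideoDataOutputSampleBufferDelegate,
                   queue: DispatchQueue) -> Bool {
        beginConfiguration()
        defer { commitConfiguration() } // always balance beginConfiguration()

        guard canAddInput(input) else { return false }
        addInput(input)

        let output = AVCaptureVideoDataOutput()
        output.alwaysDiscardsLateVideoFrames = true // drop frames we are too slow to classify
        output.setSampleBufferDelegate(delegate, queue: queue)

        guard canAddOutput(output) else { return false }
        addOutput(output)
        return true
    }
}
```

Once the session is configured, `startRunning()` is a blocking call, so it is usually dispatched to a background queue rather than called on the main thread.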

The final extensions make it possible to load a Core ML machine learning model from file, such as the MobileNetV2 model you downloaded earlier and added to your project.
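One way to do this, sketched below, is to look up the compiled model in the app bundle. Xcode compiles a `.mlmodel` file into a `.mlmodelc` directory at build time. The helper name `bundled(named:)` is hypothetical, and the resource name in the usage line assumes the file in your project is called MobileNetV2.

```swift
import CoreML
import Vision

extension MLModel {
    /// Hypothetical helper: loads a compiled model (.mlmodelc) from the main bundle.
    static func bundled(named name: String) -> MLModel? {
        guard let url = Bundle.main.url(forResource: name, withExtension: "mlmodelc") else {
            return nil
        }
        return try? MLModel(contentsOf: url)
    }
}

extension VNCoreMLModel {
    /// Hypothetical helper: wraps a bundled Core ML model for use with Vision.
    static func bundled(named name: String) -> VNCoreMLModel? {
        guard let model = MLModel.bundled(named: name) else { return nil }
        return try? VNCoreMLModel(for: model)
    }
}

// Usage, assuming the model file in the project is named "MobileNetV2":
// let visionModel = VNCoreMLModel.bundled(named: "MobileNetV2")
```

Xcode also generates a Swift class for each model you add, which is an alternative to loading by URL.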

I have also added a debugString computed property to VNClassificationObservation, which makes it easier to format the data that I will be displaying in my views.
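A sketch of such a property follows. The exact format (a whole-number percentage followed by the label) is my assumption. Only `confidence` and `identifier` come from Vision itself.

```swift
import Vision

extension VNClassificationObservation {
    /// Hypothetical formatting: confidence as a whole-number percentage,
    /// followed by the label(s) the model assigned to the frame.
    var debugString: String {
        let percent = Int((confidence * 100).rounded()) // confidence is a Float from 0 to 1
        return "\(percent)% \(identifier)"
    }
}
```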

Now we finally have our DataModel class.

This acts as the delegate that receives each frame from the camera and hands it to the Vision framework, which runs the frame through the machine learning model. We construct a VNRequest (in practice a VNCoreMLRequest) by passing it a closure. That allows us to set the debugString in the DataModel to the debugString computed property that our extension added to VNClassificationObservation.

The string describes a single result, as I am only concerned with the machine learning model’s best guess.
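To make the flow concrete, here is a self-contained sketch of a DataModel along those lines. It inlines the setup instead of relying on helper extensions, and the queue label, the `start()` method, and the "MobileNetV2" resource name are assumptions for illustration.

```swift
import AVFoundation
import Combine
import CoreML
import Vision

final class DataModel: NSObject, ObservableObject, AVCaptureVideoDataOutputSampleBufferDelegate {
    @Published var debugString = ""
    let session = AVCaptureSession()

    private let videoQueue = DispatchQueue(label: "camera.frames") // hypothetical label
    private var request: VNCoreMLRequest?

    override init() {
        super.init()
        request = makeRequest()
        configureSession()
    }

    /// Builds the Vision request; the closure runs each time a frame has been classified.
    private func makeRequest() -> VNCoreMLRequest? {
        guard let url = Bundle.main.url(forResource: "MobileNetV2", withExtension: "mlmodelc"),
              let mlModel = try? MLModel(contentsOf: url),
              let visionModel = try? VNCoreMLModel(for: mlModel) else { return nil }

        return VNCoreMLRequest(model: visionModel) { [weak self] finished, _ in
            // Assumption: the first classification is the model's best guess.
            guard let best = (finished.results as? [VNClassificationObservation])?.first else { return }
            let text = "\(Int((best.confidence * 100).rounded()))% \(best.identifier)"
            DispatchQueue.main.async { self?.debugString = text } // publish on the main thread
        }
    }

    /// Connects the rear camera to a video output that calls back into this object.
    private func configureSession() {
        guard let device = AVCaptureDevice.default(.builtInWideAngleCamera, for: .video, position: .back),
              let input = try? AVCaptureDeviceInput(device: device) else { return }

        let output = AVCaptureVideoDataOutput()
        output.setSampleBufferDelegate(self, queue: videoQueue)

        session.beginConfiguration()
        if session.canAddInput(input) { session.addInput(input) }
        if session.canAddOutput(output) { session.addOutput(output) }
        session.commitConfiguration()
    }

    /// Starts the camera off the main thread, because startRunning() blocks.
    func start() {
        videoQueue.async { [session] in session.startRunning() }
    }

    // Called for every captured frame; hands the pixel buffer to Vision.
    func captureOutput(_ output: AVCaptureOutput,
                       didOutput sampleBuffer: CMSampleBuffer,
                       from connection: AVCaptureConnection) {
        guard let request = request,
              let pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer) else { return }
        try? VNImageRequestHandler(cvPixelBuffer: pixelBuffer, options: [:]).perform([request])
    }
}
```

Taking only the first observation matches the "best guess" idea, since Vision typically returns classifications ordered from most to least confident.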

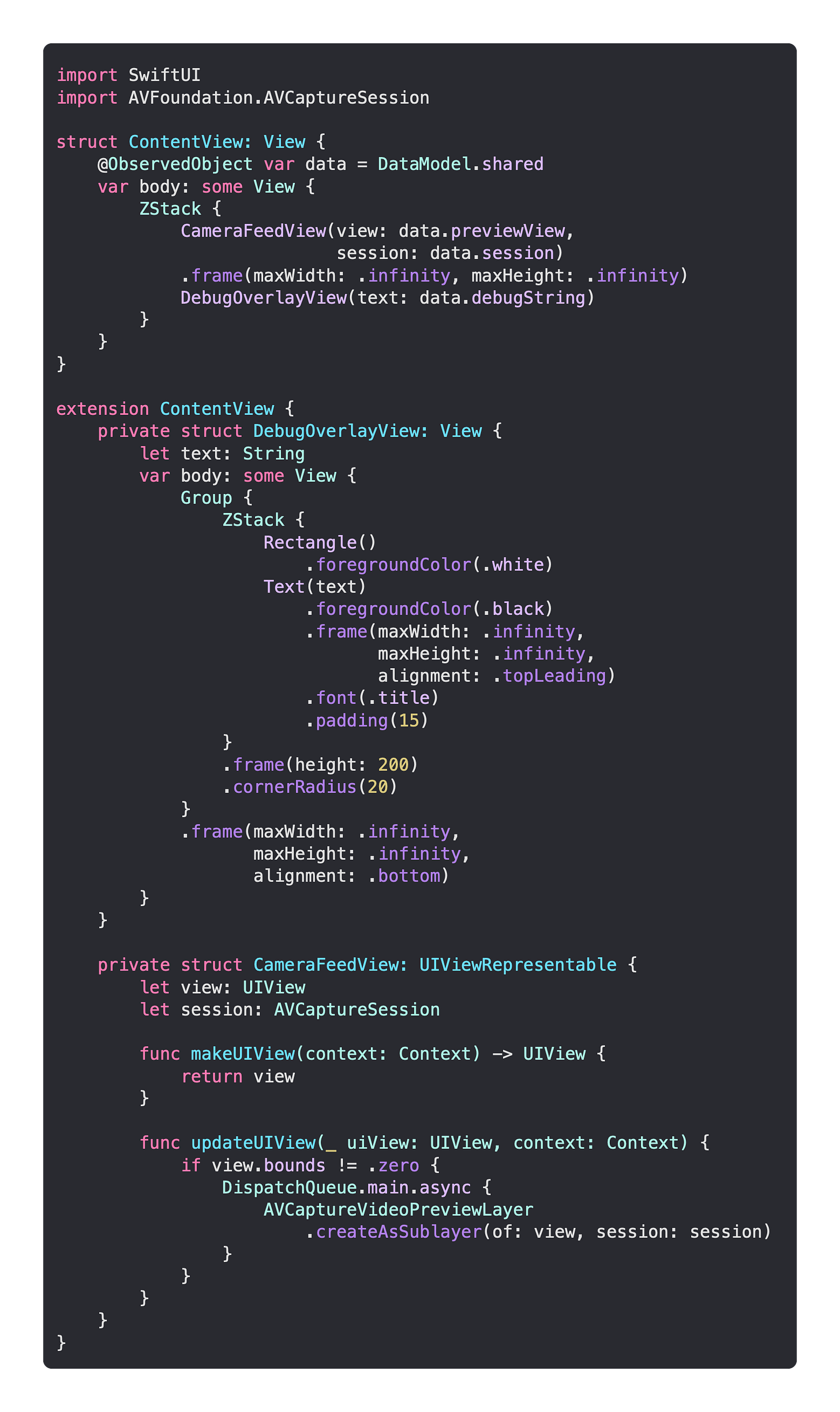

Now I’m ready to add the UI, which is made up of a simple ZStack of the camera feed and an overlay.

The overlay simply displays the debugString on a rounded background, telling you the level of confidence the model has and the objects it thinks it sees.
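A sketch of how that layout could be put together is below. To keep it self-contained, the view takes the session and the string as plain parameters, and the camera feed uses a UIView backed directly by an AVCaptureVideoPreviewLayer (a swapped-in alternative to adding a sublayer, so that the preview resizes with the view). The type names `PreviewView`, `CameraPreview`, and `CameraScreen` are hypothetical.

```swift
import AVFoundation
import SwiftUI
import UIKit

/// A UIView whose backing layer is the preview layer itself.
final class PreviewView: UIView {
    override class var layerClass: AnyClass { AVCaptureVideoPreviewLayer.self }
    var previewLayer: AVCaptureVideoPreviewLayer { layer as! AVCaptureVideoPreviewLayer }
}

/// Bridges the UIKit preview into SwiftUI.
struct CameraPreview: UIViewRepresentable {
    let session: AVCaptureSession

    func makeUIView(context: Context) -> PreviewView {
        let view = PreviewView()
        view.previewLayer.session = session
        view.previewLayer.videoGravity = .resizeAspectFill
        return view
    }

    func updateUIView(_ uiView: PreviewView, context: Context) {}
}

/// The camera feed with the classification text overlaid at the bottom.
struct CameraScreen: View {
    let session: AVCaptureSession
    let debugString: String

    var body: some View {
        ZStack(alignment: .bottom) {
            CameraPreview(session: session)
                .edgesIgnoringSafeArea(.all)

            Text(debugString)
                .font(.headline)
                .foregroundColor(.white)
                .padding()
                .background(RoundedRectangle(cornerRadius: 16).fill(Color.black.opacity(0.6)))
                .padding()
        }
    }
}
```

In the app itself, the two parameters would come from the DataModel, for example by holding it in an `@StateObject` and passing its `session` and `debugString` through.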